Intro

At least once a week I am either asked directly or @-mentioned in a question about why Invoke-RestMethod and/or ConvertFrom-Json don’t play nicely with the pipeline. I decided to put the answer in a blog post so I can just point people to it in the future.

Note that this blog entry was written while PowerShell 6.0.2 was current and 6.1.0 was on the way. This behavior may change in future versions.

The Issue

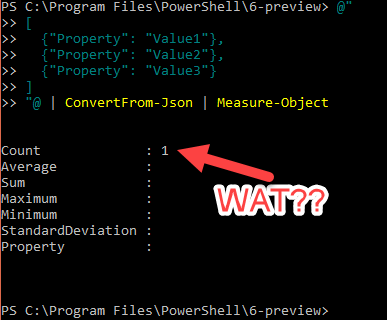

The issue is best demonstrated with some code:
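Here is a minimal sketch of the problem, using an inline JSON array so it runs without network access. The sample JSON and variable names are illustrative. On PowerShell 6.x the non-enumerating behavior is the default; the sketch applies the `-NoEnumerate` switch that ConvertFrom-Json gained in 7.0 when it is present, on the assumption that it reproduces the 6.x behavior there.

```powershell
# Illustrative JSON array with three objects (sample data, not from a real API).
$json = '[{"id":1},{"id":2},{"id":3}]'

# Assumption: on PowerShell 7.0+ the -NoEnumerate switch reproduces the 6.x
# behavior described in this post. Older versions do not have the switch.
$opts = @{}
if ((Get-Command ConvertFrom-Json).Parameters.ContainsKey('NoEnumerate')) {
    $opts['NoEnumerate'] = $true
}

# Measure-Object receives the whole array as a single object, not three items.
$piped = $json | ConvertFrom-Json @opts | Measure-Object
$piped.Count
```

The same kind of check can be run against Invoke-RestMethod by piping its output to Measure-Object.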

Result:

That’s odd. The GitHub issue endpoint returns a page size of 30 by default. The manually created JSON obviously has more than 1 object.

It’s clear that something weird is going on here. If you run the command without the pipe to Measure-Object, you see all the objects just fine. Also, if you save the results to a variable, you can access the objects from the indexers:
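A minimal sketch of the variable approach, again with illustrative inline JSON rather than a live API call. Once the result is stored, the count and the indexers behave like any other collection:

```powershell
# Illustrative JSON array (sample data).
$json = '[{"id":1},{"id":2},{"id":3}]'

# Store the deserialized result instead of piping it onward.
$issues = $json | ConvertFrom-Json

$issues.Count    # number of items in the collection
$issues[0].id    # first item
$issues[2].id    # last item
```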

Result:

Wat?

The Cause

Normally, cmdlets and functions will “unroll” collections. This means that when a function or cmdlet sends a collection type to the pipeline, instead of the next command in the pipeline receiving the raw collection, it receives the enumerated items in that collection one at a time.

Take this simple example:
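A small sketch of the normal behavior. The function name `Get-SampleNumber` is hypothetical and exists only for this illustration:

```powershell
# Hypothetical helper that returns a three-item array.
function Get-SampleNumber {
    return @(1, 2, 3)
}

# Both an array literal and a function's output are unrolled by the pipeline,
# so Measure-Object counts the individual items.
$direct = @(1, 2, 3) | Measure-Object
$fromFunction = Get-SampleNumber | Measure-Object

$direct.Count
$fromFunction.Count
```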

Result:

You can see that instead of Measure-Object receiving a single collection object, it received the three member items of that collection.

This is properly called collection enumeration. I prefer to call it “unrolling” because I liken it to breaking up a roll of coins.

The relevant lines of code that cause this are here for ConvertFrom-Json and here for Invoke-RestMethod. Both cmdlets use the WriteObject(Object) method to send the object to the pipeline. By default this does not unroll collections. To do that, they would need to use the WriteObject(Object, Boolean) method and pass true to the second parameter (would you like to know more?).
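The difference between the two overloads can be sketched from script with an advanced function, which exposes the same methods through `$PSCmdlet`. The function names `Send-Raw` and `Send-Unrolled` are hypothetical, and this is an illustration of the mechanism rather than the cmdlets' actual source:

```powershell
# Hypothetical function: WriteObject(Object) sends the array as one object.
function Send-Raw {
    [CmdletBinding()]
    param()
    $PSCmdlet.WriteObject(@(1, 2, 3))
}

# Hypothetical function: WriteObject(Object, Boolean) with $true enumerates it.
function Send-Unrolled {
    [CmdletBinding()]
    param()
    $PSCmdlet.WriteObject(@(1, 2, 3), $true)
}

$rawCount = (Send-Raw | Measure-Object).Count            # 1: the array itself
$unrolledCount = (Send-Unrolled | Measure-Object).Count  # 3: one per item
```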

The Reason

It’s all very well and good to know what piece of code is doing this, but why? What possible sense does this make? Just about everything else in PowerShell does not behave like this. Coming across this behavior is almost always shocking and unexpected.

Well, I’m here to explain that there is a good reason for this behavior. Mind you, I was not around when these cmdlets were being designed, so I cannot speak to intent. But, I can speak to the reasons why I believe this is the correct behavior, even though it is unexpected.

Serialization Parity

Valid JSON can only ever be a single object. That object can be one of several types: string, number, null, true, false, array, or object (key/value pairs). Yes, you can do all kinds of nesting inside arrays and key/value pairs, but the entire JSON construct is only ever a single parent object. This is important because the outer type may matter when you are deserializing JSON into PowerShell. My next command in the pipeline may need to know if the original JSON was an array with a single key/value pair or just a single key/value pair:

This:

versus this:

If the cmdlets unrolled the collection, the next command in the pipeline could not tell the difference.
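A sketch of that distinction, with illustrative JSON strings. As before, it assumes the `-NoEnumerate` switch available on PowerShell 7.0+ reproduces the 6.x default, and applies it only when the switch exists:

```powershell
# Assumption: -NoEnumerate (PowerShell 7.0+) reproduces the 6.x behavior.
$opts = @{}
if ((Get-Command ConvertFrom-Json).Parameters.ContainsKey('NoEnumerate')) {
    $opts['NoEnumerate'] = $true
}

# An array holding one key/value object versus a bare key/value object.
$arrayJson  = '[{"name":"a"}]'
$objectJson = '{"name":"a"}'

# The next command in the pipeline can inspect the outer type.
$arrayIsArray  = $arrayJson  | ConvertFrom-Json @opts | ForEach-Object { $_ -is [array] }
$objectIsArray = $objectJson | ConvertFrom-Json @opts | ForEach-Object { $_ -is [array] }

$arrayIsArray    # True: the outer JSON type was an array
$objectIsArray   # False: the outer JSON type was a single object
```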

Paging and Multiple JSON Sources

PowerShell 6.0.0 added a feature to Invoke-RestMethod that follows the “next” links returned by APIs, which makes paging really easy. Let’s say I want to get the second page of issues from GitHub. I could do this:

Result:

If the collection from the resulting JSON deserialization were unrolled, I would not be able to distinguish items on page 1 from items on page 2, and the count would be 60 for all items on both pages.
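The paging argument can be sketched without a network call. The hypothetical function `Get-SamplePage` below stands in for Invoke-RestMethod with `-FollowRelLink`: it writes each made-up "page" to the pipeline as one object, so a page can be picked out by position. The page contents are invented for the illustration.

```powershell
# Hypothetical stand-in for a paged API call: each page is written
# to the pipeline as a single, non-enumerated collection.
function Get-SamplePage {
    [CmdletBinding()]
    param()
    $PSCmdlet.WriteObject(@('issue-1', 'issue-2', 'issue-3'))  # page 1
    $PSCmdlet.WriteObject(@('issue-4', 'issue-5'))             # page 2
}

# The pipeline carries two objects, one per page.
$pageCount = (Get-SamplePage | Measure-Object).Count

# Skip the first page and take the second one as a whole.
$secondPage = Get-SamplePage | Select-Object -Skip 1 -First 1
$secondPage.Count
$secondPage[0]
```

If the pages were unrolled instead, the pipeline would carry five loose items and the page boundary would be gone.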

Likewise, I may not be able to do the following:

Result:

With the collections unrolled, I would have no way to retrieve the second JSON object from the collection of JSON objects (I promise I’m not trying to be confusing here).

Should That Really Be Default?

Sure, we need to be able to maintain serialization parity and provide a way to access the objects consistent with how they were created. But, does it make sense to do this by default?

For a long time I thought that the current behavior should be the default behavior. However, after being asked about this behavior week after week for months, I’m convinced that I’m either the only person or one of very few who see it that way. It appears that most users do not care about the serialization parity and just want to be able to pipe from these commands like everything else.

Though, I will argue that it is already this way with other serialization commands.

Result:

Some try to compare the CSV cmdlets, but they are kind of a special case. They are not really serializing/deserializing. More accurately, they are creating a CSV from a list of objects and converting a CSV to a list of objects. Parity only works there in limited cases. For example:
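A sketch of one such case, using a made-up object whose `Values` property holds an array. The property names are illustrative:

```powershell
# Illustrative object with an array-valued property.
$original = [pscustomobject]@{ Name = 'sample'; Values = @(1, 2, 3) }

# Round-trip through CSV. -NoTypeInformation keeps the output free of a
# #TYPE header on Windows PowerShell; it is accepted on PowerShell 6+ too.
$csv = $original | ConvertTo-Csv -NoTypeInformation
$roundTripped = $csv | ConvertFrom-Csv

$roundTripped.Name     # the simple string survives
$roundTripped.Values   # the type name as a string, not the numbers
```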

Result:

Weird, right? The array contents are lost and all that survives is the object metadata. Not a great way to serialize data.

But, I give in. On Issue #3424, the PowerShell Committee agreed that an RFC should be drafted to change the default behavior and add the option to revert. This needs to be updated simultaneously on Invoke-RestMethod and ConvertFrom-Json so they can work similarly. This work is up for grabs if anyone wants to draft the RFC and do the PR. I would be happy to guide you through the process, should you be interested.

Workarounds

In the meantime, here are a few workarounds to tide you over.

Assignment

Assign the results to a variable, and the variable will be a collection you can use normally:
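A minimal sketch with illustrative inline JSON; the same pattern should apply to Invoke-RestMethod output:

```powershell
# Illustrative JSON array (sample data).
$json = '[{"id":1},{"id":2},{"id":3}]'

# Capture first, then pipe the variable, which is enumerated as usual.
$items = $json | ConvertFrom-Json
$assignedCount = ($items | Measure-Object).Count
$assignedCount
```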

ForEach-Object

You can also pipe the results to ForEach-Object and then return the object to the pipeline, which will unroll the collection for the next command:
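A minimal sketch with illustrative inline JSON. The script block simply emits `$_` again, and script block output is enumerated on its way to the next command:

```powershell
# Illustrative JSON array (sample data).
$json = '[{"id":1},{"id":2},{"id":3}]'

# ForEach-Object re-emits whatever it receives; its output is unrolled.
$forEachCount = ($json | ConvertFrom-Json | ForEach-Object { $_ } | Measure-Object).Count
$forEachCount
```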

Parenthesize

You can also wrap the commands in parentheses. This “squeezes” the result up as a kind of temporary variable before passing it to the pipeline:
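A minimal sketch with illustrative inline JSON:

```powershell
# Illustrative JSON array (sample data).
$json = '[{"id":1},{"id":2},{"id":3}]'

# The parenthesized pipeline is evaluated first; its result is then
# enumerated when it is piped to Measure-Object.
$measured = ($json | ConvertFrom-Json) | Measure-Object
$parenCount = $measured.Count
$parenCount
```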

Conclusion

I hope now it’s clear why this funky behavior is the way it is. I hope I have also provided some decent workarounds.